Intelligent bin with Machine Learning capabilities

Team members

Marco Zucchi Mesia

Alejandro Delgado García

Joaquín Ferrer Núñez

Introduction and objectives

This assignment consisted of building a project that put into practice what we learned about Arduino in class, so that we would gain experience using electronic components in a real environment. Our group decided to build a bin able to detect different kinds of residues and, depending on the type of residue, redirect it to the appropriate container. To achieve this, we used a Machine Learning Kit, kindly donated by Edge Impulse®, to train a neural network able to identify the different kinds of residues.

Proof of concept

Bill of Materials

| Material | Price per unit |
|---|---|
| Cardboard container | 5,49€ |
| Green spray paint | 3,89€ |
| Servomotor (×2) | 2,52€ (5,04€ total) |
| Machine Learning Kit | Donated by Edge Impulse® |
| Arduino Uno R3 Kit | Provided by university |
| Total | 14,42€ |

Assembly of the container

The container has two ramps, one larger than the other, each driven by its own servo. The larger ramp (the one at the bottom) tilts forward and backward, while the smaller one (the one at the top) tilts left and right.

At the bottom of the container, four dividers arranged in a cross separate the four compartments, each holding one kind of residue. Around the container there are four gates, one per compartment, each labelled with the name of the residue and the number of the corresponding remote-control key.
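Since there are two ramps with two tilt directions each, the four compartments can be reached by combining one tilt of each ramp. The Python sketch below shows one way to express the mapping from a remote key to a pair of servo angles. It assumes that each compartment corresponds to one tilt combination, and the residue names, key assignment and angles (60° and 120° around an assumed 90° rest position) are hypothetical placeholders, not the values used in the project.

```python
from typing import Dict, Tuple

# Hypothetical routing table: remote key -> (residue label, bottom-ramp angle, top-ramp angle).
# Labels and angles are illustrative placeholders, not the project's real values.
REST_ANGLE = 90  # assumed resting (horizontal) position of both ramps, in degrees

ROUTES: Dict[int, Tuple[str, int, int]] = {
    1: ("plastic", 60, 60),    # bottom ramp forward,  top ramp left
    2: ("paper", 60, 120),     # bottom ramp forward,  top ramp right
    3: ("glass", 120, 60),     # bottom ramp backward, top ramp left
    4: ("organic", 120, 120),  # bottom ramp backward, top ramp right
}


def route_for_key(key: int) -> Tuple[str, int, int]:
    """Return (label, bottom_angle, top_angle) for a remote key."""
    if key not in ROUTES:
        raise KeyError(f"no container assigned to key {key}")
    return ROUTES[key]


def rest_position() -> Tuple[int, int]:
    """Angles that bring both ramps back to horizontal after the residue drops."""
    return (REST_ANGLE, REST_ANGLE)
```

On the Arduino, the same table would simply be a small array indexed by the key number received from the remote.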

In Image 1, the red circle indicates where the Machine Learning module with its OV7675 camera would have been placed, had we succeeded in loading the necessary library components onto the Arduino microcontroller. Since the Machine Learning recognition did not work in the end (for reasons explained later in this article), Image 1 and Image 2 show the final placement of the electrical components of the project.

Circuit

In this section, we explain how we built the electric circuit. The two servos are connected to digital pins 11 and 9 (both PWM-capable on the Arduino Uno), as well as to 5V and ground. The IR sensor is placed on the breadboard and, like the servos, is connected to 5V and ground, with its output wired to digital pin 3 (also a PWM-capable pin, although the sensor's output is simply read as a digital signal).

Had we succeeded in using the ML capabilities of the ML Kit, we would have connected the two servos to digital pins D12 and D11 of the kit's shield.
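What actually sets a servo's position is the width of a pulse repeated roughly every 20 ms. The Arduino Servo library generates these pulses in software using a hardware timer, so it works on any digital pin, not only PWM-capable ones. The Python sketch below reproduces how the library turns an angle into a pulse width, assuming its default limits of 544 µs at 0° and 2400 µs at 180° and the integer arithmetic of Arduino's map() function; the function names are our own.

```python
# Sketch of the angle -> pulse-width conversion done by the Arduino Servo library.
# Assumes the library defaults: 544 us at 0 degrees and 2400 us at 180 degrees.
MIN_PULSE_US = 544
MAX_PULSE_US = 2400
PERIOD_US = 20_000  # the library refreshes each servo roughly every 20 ms


def arduino_map(x: int, in_min: int, in_max: int, out_min: int, out_max: int) -> int:
    """Integer version of Arduino's map(); matches C truncation for non-negative terms."""
    return (x - in_min) * (out_max - out_min) // (in_max - in_min) + out_min


def angle_to_pulse_us(angle: int) -> int:
    """Clamp the angle to 0..180 degrees and map it to a pulse width in microseconds."""
    angle = max(0, min(180, angle))
    return arduino_map(angle, 0, 180, MIN_PULSE_US, MAX_PULSE_US)


def duty_cycle(angle: int) -> float:
    """Fraction of each ~20 ms period during which the control signal is high."""
    return angle_to_pulse_us(angle) / PERIOD_US
```

For example, angle_to_pulse_us(90) gives 1472 µs rather than exactly 1500 µs, because map() uses integer division.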

Machine Learning

The Machine Learning part was the core of the project and one of the hardest parts to grasp. On the programming side, we relied on several libraries and development frameworks that helped us obtain the training and test image datasets and compress the model so that it fit on the embedded Arduino Nano®.

These are the libraries and frameworks used in the project:

- JMD Imagescraper repository

- TensorFlow Lite and TensorFlow Lite Micro

- Arduino IDE

- JetBrains PyCharm Professional

The first repository contains a few scripts that simplify dataset acquisition by downloading and tagging images based on a DuckDuckGo search. The second item refers to an open-source framework developed by Google that lets us train neural network models (TensorFlow) and shrink them to minimal sizes so that they fit the microcontroller's limitations, both in RAM and in flash storage. Lastly, we used two IDEs for code assistance and for interacting with the Arduino microcontroller and the sensors attached to it: the Arduino IDE, with its C/C++-based language, for the Arduino side, and JetBrains PyCharm® Professional, with Python, for training the neural network.

Finally, it is worth noting that most of our ML know-how, including how to deploy the ML model on the Arduino platform, came from the Harvard Machine Learning Kit courses: Course 1, Course 2 and Course 3.

In the next section, we explain the different phases of the Machine Learning side of the project:

- Obtaining the training, validation and evaluation image datasets for the different kinds of residues

- Training the ML model itself

- Compressing the ML model to fit the microcontroller's physical limitations

Dataset generation

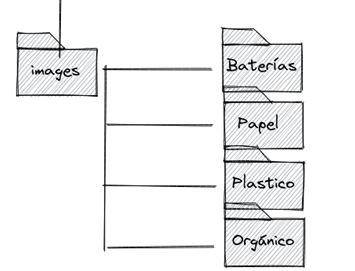

For the neural network to learn, it needs a dataset labelled with the different kinds of residues we want to classify. As mentioned before, we used the JMD imagescraper script, which downloads images from DuckDuckGo for a given set of keywords. The dataset is stored using the following directory structure:

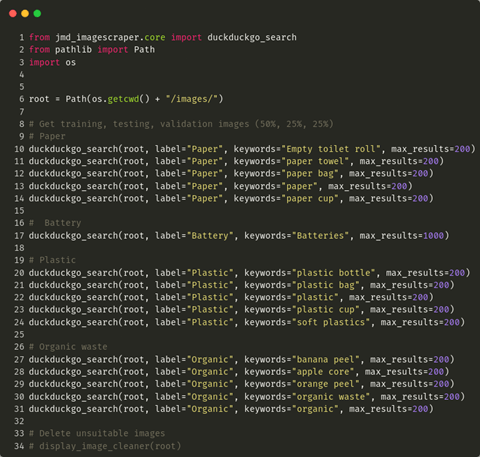
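A layout like this can be produced by a short split script that takes the images downloaded for each class and distributes them into training, validation and evaluation folders. The stdlib-only Python sketch below assumes the downloaded images sit in one sub-folder per residue class and uses a hypothetical 70/20/10 split; the function name, folder names and proportions are illustrative, since the project's actual values are not stated.

```python
import random
import shutil
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}
SPLITS = ("training", "validation", "evaluation")


def split_dataset(raw_dir, out_dir, train_pct=70, val_pct=20, seed=42):
    """Copy raw_dir/<class>/* into out_dir/<split>/<class>/ and return per-split counts.

    train_pct and val_pct are integer percentages; evaluation gets the remainder.
    """
    raw_dir, out_dir = Path(raw_dir), Path(out_dir)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    counts = {split: {} for split in SPLITS}
    for class_dir in sorted(p for p in raw_dir.iterdir() if p.is_dir()):
        images = sorted(p for p in class_dir.iterdir() if p.suffix.lower() in IMAGE_EXTENSIONS)
        rng.shuffle(images)
        n_train = len(images) * train_pct // 100
        n_val = len(images) * val_pct // 100
        parts = {
            "training": images[:n_train],
            "validation": images[n_train:n_train + n_val],
            "evaluation": images[n_train + n_val:],
        }
        for split, files in parts.items():
            dest = out_dir / split / class_dir.name
            dest.mkdir(parents=True, exist_ok=True)
            for image in files:
                shutil.copy2(image, dest / image.name)
            counts[split][class_dir.name] = len(files)
    return counts
```

Usage would look like split_dataset("raw", "dataset"); non-image files picked up by the scraper are skipped by the extension filter.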

Each sub-folder contains the images that are fed into the ML model during the training, validation and evaluation stages. The final script is as follows:

Model Training

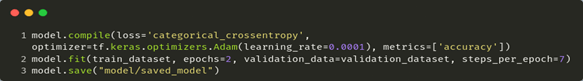

The model was trained with TensorFlow in Python on a full-fledged computer rather than on the microcontroller.

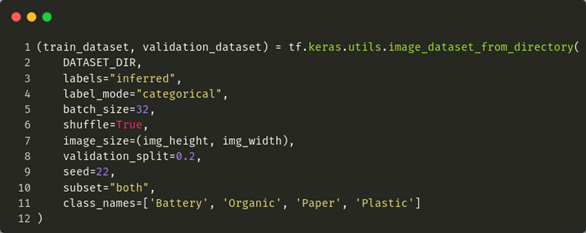

First, the dataset had to be loaded from the folder, specifying a training dataset and a validation dataset (the latter used to check whether the neural network was learning «correctly», that is, generalizing to images it had not been trained on).

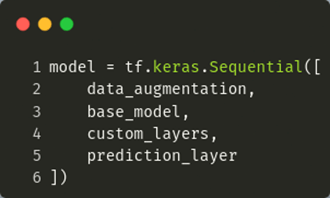

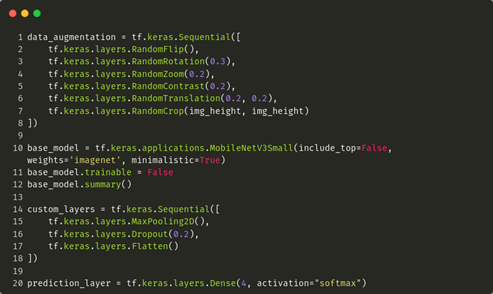

Then, we designed the architecture of the neural network. It consisted of four high-level layers: the augmentation layer (which expands the dataset by applying zoom, rotation, crop and similar transformations to the original images), the pre-trained model, some custom layers that tailor the network to our specific use case and, finally, the prediction layer.

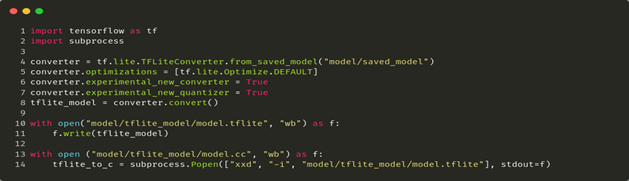
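The prediction layer produces one score per residue class. A common way to turn those scores into a decision is a softmax followed by picking the most probable class, optionally rejecting low-confidence predictions. The Python sketch below illustrates this; the class names are hypothetical and listed alphabetically because Keras' image_dataset_from_directory, if used to load the folders, orders classes alphanumerically by sub-folder name. The 0.6 confidence threshold is an arbitrary illustrative value.

```python
import math

# Hypothetical class names, in alphabetical order (the order Keras uses when it
# infers labels from sub-folder names).
CLASS_NAMES = ["glass", "organic", "paper", "plastic"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, threshold=0.6):
    """Return (label, probability), or (None, probability) if the model is not confident."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]  # e.g. leave the ramps at rest and ask for a remote key
    return CLASS_NAMES[best], probs[best]
```

If the model's last layer already applies a softmax activation, only the argmax and threshold part is needed.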

Compression of the ML model

In this phase, the ML model was compressed with optimization techniques to reduce its size and RAM requirements. Working in the PyCharm IDE, we first converted the model to the TensorFlow Lite (.tflite) format and then turned that file into a C-style array that could be compiled directly into the Arduino Nano program. The code to achieve this is shown in the following image:
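The second step, turning the .tflite file into a C array, is often done with the xxd -i command-line tool. A minimal Python sketch of the same idea follows; the function names and the g_model variable name are hypothetical, and this is not the project's own script. Because Arduino sketches are compiled as C++, the array is declared with alignas(16), a precaution seen in many TensorFlow Lite Micro examples.

```python
from pathlib import Path


def to_c_array(data: bytes, var_name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as C++ source, similar in spirit to `xxd -i`."""
    # alignas(16) mirrors the alignment used in many TensorFlow Lite Micro examples.
    lines = [f"alignas(16) const unsigned char {var_name}[] = {{"]
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(data)};")
    return "\n".join(lines) + "\n"


def tflite_to_header(tflite_path, header_path, var_name="g_model"):
    """Read a .tflite file and write it out as a header the Arduino sketch can include."""
    data = Path(tflite_path).read_bytes()
    Path(header_path).write_text(to_c_array(data, var_name))
```

Calling tflite_to_header("model.tflite", "model.h") would produce a header that the sketch includes and passes to the TensorFlow Lite Micro interpreter.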

Arduino

The Arduino code is very extensive, but regarding model compression, there are further techniques that reduce the memory footprint on the device. These techniques are part of the TensorFlow Lite Micro («for microcontrollers») framework and essentially consist of including only the TensorFlow operations that the model strictly needs, since the «standard» library contains a large number of operations, most of which a given model never uses.

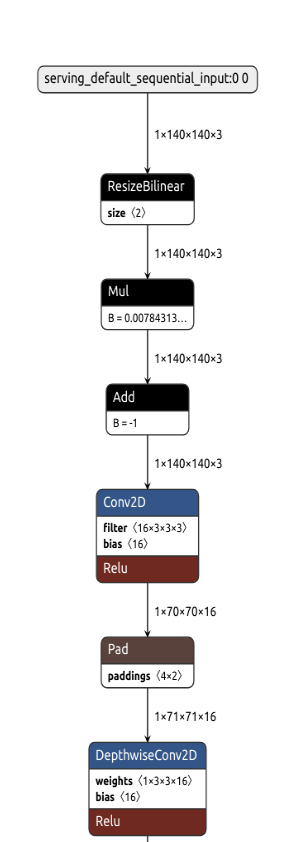

To determine which operations our ML model uses, we used Netron, a website for visualizing neural network models layer by layer. The following screenshot shows the operations our ML model uses:

The following code snippet shows how we instruct TensorFlow Lite Micro to include only the operations our model strictly needs. These operations are registered in an op resolver, and at run time the TensorFlow Lite Micro interpreter executes the model's operations one by one using the registered kernels. Because the interpreter reads the model as data, the inference code does not have to be rewritten when the model changes, and interpreting the model adds only a small run-time overhead.

Arduino code

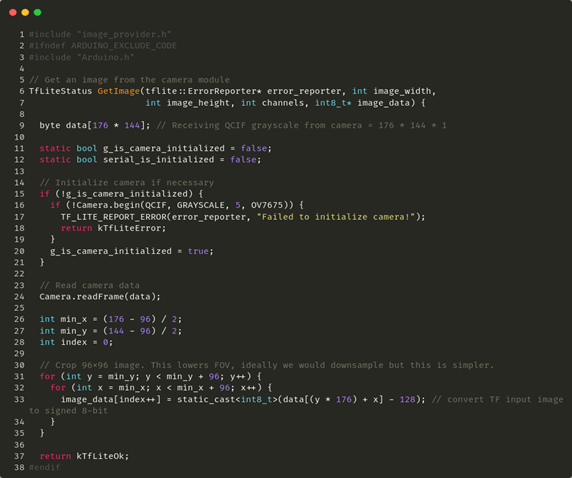
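In TensorFlow Lite Micro, this selection is typically expressed with a tflite::MicroMutableOpResolver&lt;N&gt;, where N is the number of distinct operations and each one is registered with a call such as AddConv2D() or AddSoftmax(). The hypothetical Python helper below generates those registration lines from a list of operator names, written as in the TensorFlow Lite schema (e.g. CONV_2D; Netron may display them with different capitalization). Only a handful of common operators are mapped, and the operator list in the tests is made up, not the one our model used.

```python
# Hypothetical helper: map TensorFlow Lite operator names to the corresponding
# MicroMutableOpResolver registration methods (only a few common ones covered).
SCHEMA_TO_RESOLVER = {
    "CONV_2D": "AddConv2D",
    "DEPTHWISE_CONV_2D": "AddDepthwiseConv2D",
    "AVERAGE_POOL_2D": "AddAveragePool2D",
    "FULLY_CONNECTED": "AddFullyConnected",
    "RESHAPE": "AddReshape",
    "SOFTMAX": "AddSoftmax",
}


def resolver_snippet(ops, var="op_resolver"):
    """Return C++ lines that register each distinct operation exactly once."""
    unique = sorted(set(ops))
    missing = [op for op in unique if op not in SCHEMA_TO_RESOLVER]
    if missing:
        raise ValueError(f"no mapping for: {', '.join(missing)}")
    # The template argument must be at least the number of registered operations.
    lines = [f"static tflite::MicroMutableOpResolver<{len(unique)}> {var};"]
    lines += [f"{var}.{SCHEMA_TO_RESOLVER[op]}();" for op in unique]
    return "\n".join(lines)
```

Registering too few operations makes model loading fail at run time, while every extra operation costs flash, which is why the list is derived from the model itself.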

OV7675 Camera

The camera capture process was based on the example code provided in the library documentation, resulting in the following code snippet:

Due to the length of the Arduino code, we decided not to include all the files in this blog post, but you can find the code at the following link:

https://gist.github.com/marcozucchi/24256f1e4142c39c3fad6f1333f7441e
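The Arduino_OV767X library, commonly used with the OV7675 camera of the Machine Learning Kit, can deliver frames in RGB565, a 16-bit format with 5 bits of red, 6 of green and 5 of blue, whereas image models are usually trained on 8-bit RGB images. Assuming RGB565 capture, a conversion along the lines of the Python sketch below would be needed before a frame reaches the model. The function names are hypothetical, the byte order (big-endian by default here) is an assumption to check against the actual capture code, and resizing and normalization are left out.

```python
from typing import List, Tuple


def rgb565_to_rgb888(pixel: int) -> Tuple[int, int, int]:
    """Expand one 16-bit RGB565 pixel to 8 bits per channel."""
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F
    # Replicate the high bits into the low bits so that full intensity maps to 255.
    return ((r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2))


def frame_to_rgb(buf: bytes, big_endian: bool = True) -> List[Tuple[int, int, int]]:
    """Convert a raw RGB565 frame buffer (2 bytes per pixel) into RGB tuples."""
    if len(buf) % 2:
        raise ValueError("RGB565 frames need an even number of bytes")
    order = "big" if big_endian else "little"
    return [rgb565_to_rgb888(int.from_bytes(buf[i:i + 2], order)) for i in range(0, len(buf), 2)]
```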

Issues during the engineering process and proposed solutions

We ran into several difficulties. The first ones arose when planning the assembly of the project. We considered a few different structures, such as two crossed sliding platforms that would redirect the residues to the appropriate container, but it was unclear how we would attach the sliders to the sides of the container (one of the goals of the project was to keep it as low-cost as possible).

After discarding several options, we decided that the most cost-effective solution was two platforms that rotated depending on the kind of residue.

After that engineering decision, we found that some objects could bounce off the second platform because of its small size, so a residue could end up in the wrong container. Our solution was to make both platforms rotate at the same time, which reduces the chance of objects bouncing off.

We also had to prevent the two platforms from bumping into each other while rotating, so we fine-tuned the maximum angle each platform rotates to. Then we found that one servo did not rotate correctly because of a support platform on the other side, so we added a screw to help the servos rotate.
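Moving both platforms together and limiting how far each one rotates can be combined into a single motion plan: interpolate both servo angles in lockstep and clamp every intermediate value to a safe range. The Python sketch below assumes hypothetical safe ranges of 60° to 120° for each platform; these are placeholders, not the angles found in the project. On the Arduino, each pair in the returned path would be sent with two Servo::write() calls followed by a short delay.

```python
from typing import List, Tuple

# Hypothetical safe ranges (degrees) in which the platforms cannot touch each other.
BOTTOM_LIMITS = (60, 120)
TOP_LIMITS = (60, 120)


def clamp(angle: int, limits: Tuple[int, int]) -> int:
    """Keep an angle inside the (low, high) range."""
    low, high = limits
    return max(low, min(high, angle))


def synchronized_path(start: Tuple[int, int], target: Tuple[int, int],
                      steps: int = 10) -> List[Tuple[int, int]]:
    """Interpolate (bottom, top) angles together so both platforms move at the same time.

    Every intermediate pair is clamped to the safe ranges above.
    """
    (b0, t0), (b1, t1) = start, target
    path = []
    for i in range(1, steps + 1):
        b = round(b0 + (b1 - b0) * i / steps)
        t = round(t0 + (t1 - t0) * i / steps)
        path.append((clamp(b, BOTTOM_LIMITS), clamp(t, TOP_LIMITS)))
    return path
```

Interpolating in small steps, rather than writing the final angle at once, also keeps both platforms arriving at their targets at the same moment.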

Finally, we also faced challenges with the Machine Learning process. We used the Harvard course library, but it turned out not to be updated with the latest TensorFlow Lite Micro operations, so we were not able to fully deploy the ML model. The updated library we should have used (had we found the solution earlier) can be downloaded here.

Conclusion and future improvements

This project allowed us to get acquainted with Machine Learning from scratch, as well as with its deployment in a resource-constrained environment such as the Arduino Nano. The project could be extended and brought closer to a commercial product by making camera detection more precise, using servos with greater torque, or making the containers larger. The ML model itself could also be improved to recognize the different residues more accurately.